What do we mean when we say data ingestion? Essentially, it’s the process of bringing data from new sources into an existing system or process.

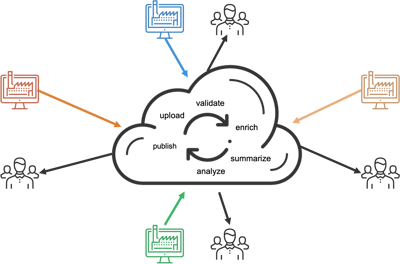

The ingestion process usually requires a sequence of operations, from retrieving the data to parsing it, validating it, transforming and enriching it, through to loading and archiving.
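To make that sequence concrete, here is a minimal Python sketch that chains the stages as plain functions. Everything in it is hypothetical: `retrieve` returns a hard-coded CSV string instead of fetching a real file, `load` and `archive` append to in-memory lists instead of writing to a database or file store, and the single validation rule (the amount must be numeric) is only an example.

```python
import csv
import io

def retrieve():
    # Hypothetical stand-in for fetching a client file (SFTP, API, bucket, ...).
    return "id,name,amount\n1,Alice,10.50\n2,Bob,abc\n3,Carol,7\n"

def parse(raw):
    # Turn raw CSV text into a list of dicts keyed by column name.
    return list(csv.DictReader(io.StringIO(raw)))

def validate(rows):
    # Split rows into valid and rejected: 'amount' must parse as a number.
    valid, rejected = [], []
    for row in rows:
        try:
            float(row["amount"])
            valid.append(row)
        except ValueError:
            rejected.append(row)
    return valid, rejected

def transform(rows):
    # Convert types and enrich each row with a (hypothetical) source tag.
    return [
        {"id": int(r["id"]), "name": r["name"].strip(),
         "amount": float(r["amount"]), "source": "client_a"}
        for r in rows
    ]

def load(rows, target):
    # Stand-in for writing to a database or warehouse table.
    target.extend(rows)

def archive(raw, store):
    # Keep the original file so it can be reprocessed later.
    store.append(raw)

def run_pipeline(target, store):
    raw = retrieve()
    valid, rejected = validate(parse(raw))
    load(transform(valid), target)
    archive(raw, store)
    return rejected

table, archive_store = [], []
rejected = run_pipeline(table, archive_store)
print(len(table), len(rejected))  # 2 1
```

Keeping each stage as a separate function means any one of them can be swapped or extended (for example, more validation rules) without touching the others.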

This data often comes from third parties (frequently customers whose data we’re onboarding) and arrives in unknown or inconsistent formats and of variable quality, and that is what makes ingesting it challenging.

We need to build data ingestion pipelines that can perform all the steps needed to ingest the data, as well as accounting for inconsistencies and adapting to whatever new data comes in – and ideally doing it all automatically.

What is data validation?

Data validation is the process of checking data, and cleansing it where necessary, to make sure its quality is as expected and that it is correct and useful.

Where should you do data validation?

The somewhat unhelpful answer is that you should perform these checks wherever in the pipeline it makes sense to validate the data, and that can change depending on the type of pipeline you’re working with. Typically, data validation is done either at the beginning or at the end of the process.

We also need to decide at what level we should validate our data – at the record, file, or process level (or a combination):

- Process level – is the process itself working as expected?

- File level – are the files we’re receiving what we’re expecting?

- Record level – are the details in each record correct?
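As an illustration, the sketch below shows one check at each level. The schema (`EXPECTED_COLUMNS`), the rules and the function names are assumptions made up for this example; real checks depend on the data you’re onboarding.

```python
import csv
import io

# Hypothetical schema for one client feed.
EXPECTED_COLUMNS = ["id", "email", "amount"]

def check_file(raw):
    """File level: is this the file we were expecting?"""
    if not raw.strip():
        return ["file is empty"]
    header = next(csv.reader(io.StringIO(raw)))
    if header != EXPECTED_COLUMNS:
        return [f"unexpected header: {header}"]
    return []

def check_record(row):
    """Record level: are the details in this record correct?"""
    problems = []
    if not row["id"].isdigit():
        problems.append("id must contain digits only")
    if "@" not in row["email"]:
        problems.append("email is missing '@'")
    try:
        if float(row["amount"]) < 0:
            problems.append("amount is negative")
    except ValueError:
        problems.append("amount is not a number")
    return problems

def check_process(rows_read, rows_loaded, rows_rejected):
    """Process level: is every record we read accounted for (loaded or rejected)?"""
    accounted = rows_loaded + rows_rejected
    if rows_read != accounted:
        return [f"read {rows_read} rows but accounted for {accounted}"]
    return []

raw = "id,email,amount\n1,a@example.com,5\nx,bad-email,-2\n"
file_problems = check_file(raw)  # empty list, so the file is safe to parse
rows = list(csv.DictReader(io.StringIO(raw)))
errors = {row["id"]: check_record(row) for row in rows}  # row "x" fails all three rules
loaded = [row for row in rows if not errors[row["id"]]]
rejected = [row for row in rows if errors[row["id"]]]
print(check_process(len(rows), len(loaded), len(rejected)))  # []
```

In a real pipeline the loaded count would come from the target system rather than being computed in the same step, which is what makes the process-level reconciliation meaningful.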

The challenges of scaling data validation

Bad data typically makes up a fraction of everything you receive, so as the volume of data you’re dealing with scales up, so does the amount of bad data you have to detect and filter out.

Data validation also becomes challenging when you have to manage many data sources. Ideally, you want to handle all your data ingestion in one pipeline, even when your sources vary – you don’t want to build and maintain a separate pipeline for each source.
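One common way to get there is to keep a single pipeline and move everything source-specific into configuration. In this minimal sketch, the client names, delimiters and column mappings in `SOURCE_CONFIG` are hypothetical; onboarding another source means adding an entry rather than writing new pipeline code.

```python
import csv
import io

# Hypothetical per-source settings: each client names columns differently and
# uses its own delimiter, but all map onto one shared target schema.
SOURCE_CONFIG = {
    "client_a": {"delimiter": ",", "columns": {"CustID": "customer_id", "Total": "amount"}},
    "client_b": {"delimiter": ";", "columns": {"customer": "customer_id", "amt_usd": "amount"}},
}

def ingest(source, raw):
    """One shared pipeline; only the configuration differs between sources."""
    cfg = SOURCE_CONFIG[source]
    reader = csv.DictReader(io.StringIO(raw), delimiter=cfg["delimiter"])
    out = []
    for row in reader:
        # Rename source-specific columns to the shared schema and drop extras.
        # A missing mapped column raises KeyError, which a file-level check should catch first.
        record = {target: row[src] for src, target in cfg["columns"].items()}
        record["source"] = source
        out.append(record)
    return out

a = ingest("client_a", "CustID,Total\n42,9.99\n")
b = ingest("client_b", "customer;amt_usd;note\n7;3.50;vip\n")
print(a)  # [{'customer_id': '42', 'amount': '9.99', 'source': 'client_a'}]
print(b)  # [{'customer_id': '7', 'amount': '3.50', 'source': 'client_b'}]
```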

For reliability and consistency, it’s also important that your data validation is automated.

What happens after the data is validated?

To keep the automated ingestion process flowing, you need to decide what happens after your data is validated.

- Do you keep processing the data or do you fail?

- Do you fail the record, or the entire ingestion process?

- Do you keep processing and log suspect or invalid data?

- How do you present the validation results to provide actionable insights?
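There’s no single right answer to these questions, but one common pattern is sketched below: reject and log individual bad records, fail the whole run if the rejection rate exceeds a threshold, and summarize rejections by reason so the results are actionable. The threshold, the `ValidationResult` class, the example rule and the report wording are all assumptions for illustration.

```python
from collections import Counter
from dataclasses import dataclass, field

@dataclass
class ValidationResult:
    loaded: list = field(default_factory=list)
    rejected: list = field(default_factory=list)  # (row_number, row, reasons)
    failed: bool = False

def apply_policy(rows, check, max_reject_rate=0.05):
    """Reject bad records individually, but fail the whole run if too many are bad.

    max_reject_rate is a hypothetical default; a suitable value depends on the feed.
    """
    result = ValidationResult()
    for number, row in enumerate(rows, start=1):
        reasons = check(row)
        if reasons:
            result.rejected.append((number, row, reasons))  # log it and keep going
        else:
            result.loaded.append(row)
    if rows and len(result.rejected) / len(rows) > max_reject_rate:
        result.failed = True  # too much bad data: load nothing from this run
        result.loaded = []
    return result

def summarize(result):
    """One-line report with rejection counts grouped by reason."""
    counts = Counter(r for _, _, reasons in result.rejected for r in reasons)
    status = "FAILED" if result.failed else "OK"
    parts = [f"{status}: {len(result.loaded)} loaded, {len(result.rejected)} rejected"]
    if counts:
        parts.append(", ".join(f"{reason} x{n}" for reason, n in counts.most_common()))
    return "; ".join(parts)

def check(row):
    # Example rule: exactly one '@' in the email field.
    return [] if row.get("email", "").count("@") == 1 else ["invalid email"]

rows = [{"email": "a@x.com"}, {"email": "b@x.com"}, {"email": "oops"}]
print(summarize(apply_policy(rows, check, max_reject_rate=0.5)))
# OK: 2 loaded, 1 rejected; invalid email x1
print(summarize(apply_policy(rows, check, max_reject_rate=0.1)))
# FAILED: 0 loaded, 1 rejected; invalid email x1
```

Keeping the row number and reasons with each rejected record means the same data can feed both an automated decision (continue or fail) and a report a person can act on.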

Common goals for automated data validation

- Reduce the burden on clients: You want to make it as easy as possible for your customers to give you their data. That means you not only have to be lenient in the formats you accept, but you also need to be able to:

  - Fix common errors without manual intervention

  - Inform clients early on if there are issues that need fixing (i.e. before they’ve put more and more bad data into the pipeline)

- Provide robust reporting on the data ingestion process: Even if your data is passing quality checks, you still want reports on it, both to increase confidence in the data quality and to see trends in quality. For instance, if you’re getting more errors on certain days or from certain sources, you can investigate and fix problems before they become severe.

- Empower less-technical staff to see and take action on validation results: Giving less-technical staff (e.g. your customer onboarding team) the ability to correct issues and reprocess data themselves not only saves your development team time but also generally means a faster, more streamlined onboarding process for your customers.

- Design for resilience: Being able to handle variability in input format (whether it varies client by client, day by day, or with any other factor) without human intervention also speeds up your onboarding process and makes it easier to scale.

- Orchestrate the complete end-to-end ingestion process: The more of the data pipeline you can automate, from detecting incoming files to post-processing reporting, the more time you save and the more data you can handle, not to mention minimizing human error.

- Reusability: Design your ingestion process so onboarding a new client doesn’t mean building a new pipeline. Even if your sources, data checks and business rules change, you can use the same pipeline – allowing you to scale faster and with less effort.
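Being lenient with input and fixing common errors without manual intervention often comes down to normalization rules like the ones below. This is a sketch under stated assumptions: the accepted date formats, the currency symbols and the treatment of commas as thousands separators are hypothetical choices, and each would need to match the sources you actually receive (for example, `31/01/2024` and `01/31/2024` can’t both be handled by one day/month rule).

```python
import math
from datetime import datetime

# Hypothetical: the date formats agreed with our sources. Day-first formats only;
# a US-style month-first feed would need its own rule.
ACCEPTED_DATE_FORMATS = ["%Y-%m-%d", "%d/%m/%Y", "%d.%m.%Y", "%Y%m%d"]

def normalize_date(value):
    """Return the date as an ISO 8601 string, or None if no accepted format matches."""
    value = value.strip()
    for fmt in ACCEPTED_DATE_FORMATS:
        try:
            return datetime.strptime(value, fmt).date().isoformat()
        except ValueError:
            continue  # try the next accepted format
    return None

def normalize_amount(value):
    """Return the amount with two decimals, or None if it still isn't a number.

    Assumes commas are thousands separators (so "1,50" would become "150.00");
    a source that uses decimal commas would need a different rule.
    """
    cleaned = value.strip().lstrip("$£€").replace(",", "")
    try:
        amount = float(cleaned)
    except ValueError:
        return None
    if not math.isfinite(amount):  # reject "inf" and "nan"
        return None
    return f"{amount:.2f}"

def auto_fix(row):
    """Fix what can be fixed automatically and report what can't."""
    fixed, problems = dict(row), []
    for key, normalize in (("date", normalize_date), ("amount", normalize_amount)):
        result = normalize(row[key])
        if result is None:
            problems.append(f"{key}: could not interpret {row[key]!r}")
        else:
            fixed[key] = result
    return fixed, problems

print(auto_fix({"date": " 31/01/2024 ", "amount": "$1,234.5"}))
# ({'date': '2024-01-31', 'amount': '1234.50'}, [])
print(auto_fix({"date": "Jan 31", "amount": "n/a"})[1])
# ["date: could not interpret 'Jan 31'", "amount: could not interpret 'n/a'"]
```

Values that can’t be interpreted are returned as problems rather than silently dropped, so they can be reported back to the client early.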

See how to build automated data validation into your pipelines with CloverDX

In the second part of this post, we walk through what these data validation steps look like in a data ingestion pipeline built in CloverDX.

You can watch the whole video that these posts are based on here: Data validation in data ingestion processes.