Integrate applications' data with your ETL processes: with Data Services from CloverDX you can develop REST APIs using CloverDX graphs. Data Services are built into CloverDX Server so you don't need any extra modules, servers or dependencies. Develop, publish, call... profit.

API-Based Data Integration

API-based data integrations have become increasingly popular in recent years. We’ve seen CloverDX on the client side of APIs for quite a while now: calling and orchestrating SOAP or REST services as part of a data-crunching workflow is nothing new.

But now we're introducing the server side as well: allowing systems to interact with CloverDX graphs directly, over API calls.

At the end of the day, when applications talk to each other, there’s always data present: in URLs, in headers and parameters, in payloads, HTTP requests and responses, etc. And that data needs to be manipulated: parameters need to be calculated, requests generated, responses parsed and pushed downstream.

Data and transformations! Sounds like a job for Clover, doesn’t it?

Transformations accessible as REST APIs open up interesting possibilities for how systems can talk to CloverDX. Or, how systems can talk to each other through CloverDX. Let’s see what some of those possibilities are...

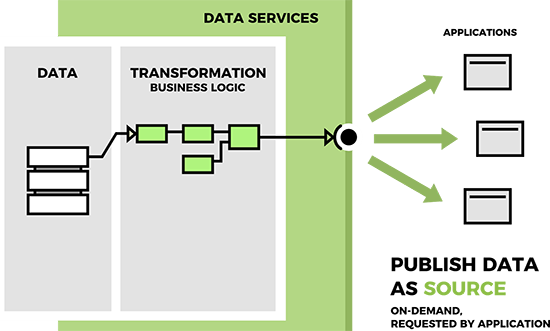

Option 1: Publish Data Source via API

Imagine a bunch of applications that need to pull data from some external storage, or maybe from another application. Chances are the data isn’t in a shape readily usable by those applications: you need to transform it, change the format, maybe augment it with other sources, and so on. In theory you could modify your applications to understand that external storage and pull and reshape the data on their own. But that costs development time, creates unwanted dependencies and, if the data is used in multiple places, duplicates business logic. All of these are worth avoiding.

Data Services offers a much more elegant solution. Put a middle layer between your apps and the data, and this layer will take care of turning the “foreign” data into the right shape every time one of the applications asks for it. How? You simply take a CloverDX transformation and publish it as an API endpoint.
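To make the consumer side concrete, here is a minimal client sketch in Python. The base URL, the `customers` endpoint, its `country` and `limit` parameters and the sample response are all assumptions made up for illustration; your published endpoint will define its own path, parameters and payload.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request

# Hypothetical server address; replace with your own CloverDX Server URL.
BASE_URL = "https://cloverdx.example.com/data-service"


def build_get_request(endpoint: str, params: dict) -> Request:
    """Build a GET request for a published endpoint with query parameters."""
    query = urlencode(sorted(params.items()))
    url = f"{BASE_URL}/{endpoint.strip('/')}"
    if query:
        url = f"{url}?{query}"
    return Request(url, headers={"Accept": "application/json"}, method="GET")


def parse_records(body: bytes) -> list:
    """Decode a JSON array of records returned by the endpoint."""
    records = json.loads(body.decode("utf-8"))
    if not isinstance(records, list):
        raise ValueError("expected a JSON array of records")
    return records


# "customers" and its parameters are hypothetical names.
request = build_get_request("customers", {"country": "CZ", "limit": 10})

# Against a real endpoint you would send it with urllib.request.urlopen(request).
# Here a made-up response body stands in for the server's reply.
sample_body = b'[{"id": 1, "name": "Acme"}]'
records = parse_records(sample_body)
```

The point of the sketch is what the application does not contain: no knowledge of the external storage and no reshaping logic, just a request and ready-to-use records.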

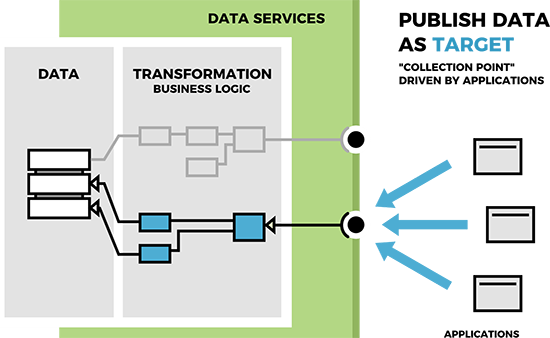

Option 2: Smart Data Collection Point

A second possibility: flip the direction of the data flow and look at the API as a collection endpoint.

Multiple systems can upload data through the endpoint for a CloverDX transformation to process. A request comes in carrying data, then your transformation graph crunches the parameters and the payload, and stores the result. Do you need transparent validation before storage? Do parts of that payload need to be transparently broadcast to multiple destinations, hiding such details from the uploaders? The collection endpoint has you covered in these scenarios.

Need to support different ways of feeding data into your central repository, e.g. to support multiple data formats from various clients? Publish a bunch of different collection endpoints, all pushing incoming data to the single location through different transformations.
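From the uploader's point of view, one endpoint per format might look something like the Python sketch below. The endpoint paths (`orders/json`, `orders/csv`), the base URL and the order fields are hypothetical; the only idea taken from the text is that each format gets its own endpoint while everything lands in one place on the server side.

```python
import json
from urllib.request import Request

# Hypothetical base URL and endpoint names, one endpoint per accepted format.
BASE_URL = "https://cloverdx.example.com/data-service"
COLLECTION_ENDPOINTS = {
    "json": ("orders/json", "application/json"),
    "csv": ("orders/csv", "text/csv"),
}


def build_upload(fmt: str, payload: bytes) -> Request:
    """Build a POST request aimed at the collection endpoint for this format."""
    if fmt not in COLLECTION_ENDPOINTS:
        raise ValueError(f"no collection endpoint for format: {fmt}")
    path, content_type = COLLECTION_ENDPOINTS[fmt]
    return Request(
        f"{BASE_URL}/{path}",
        data=payload,
        headers={"Content-Type": content_type},
        method="POST",
    )


# Two clients sending the same (made-up) order in different formats.
json_upload = build_upload(
    "json", json.dumps([{"order_id": 7, "total": 19.9}]).encode("utf-8")
)
csv_upload = build_upload("csv", b"order_id,total\n7,19.9\n")

# Send either one with urllib.request.urlopen(...) once the endpoints exist.
```

Validation, broadcasting and storage stay invisible to the uploader; the client only needs to know which endpoint matches its format.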

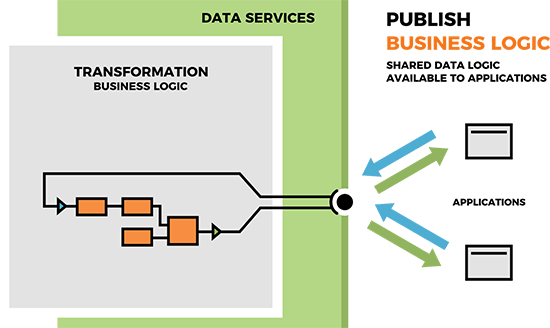

Option 3: Publishing Business Logic

Or a third option: ignore the data for a bit and think about the API as publishing the transformation graph itself.

In many data projects you build a bunch of components that others could use if those components were somehow available, shared. You can turn those components into subgraphs and share a repository of those. What if you wanted to share that logic in more of an ‘interactive’ mode? Pass a few records in, get a fast response back. With transformation graphs published as Data Services, systems can access their logic directly with very little overhead. And it works for everyone: that good old cron script using wget or curl, an AJAX call from a React or Angular front end, or a call-out from your ESB or BPEL flow.
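The "few records in, fast response back" pattern can be sketched from a script's perspective as follows. Everything specific here is an assumption: the `normalize-address` endpoint, its JSON-array payload, and the fake transport that upper-cases a `city` field purely so the example runs without a server.

```python
import json
from typing import Callable
from urllib.request import Request, urlopen

# Hypothetical endpoint publishing a piece of shared transformation logic.
SERVICE_URL = "https://cloverdx.example.com/data-service/normalize-address"


def http_transport(request: Request) -> bytes:
    """Send the request over HTTP and return the raw response body."""
    with urlopen(request, timeout=10) as response:
        return response.read()


def call_service(
    records: list, transport: Callable[[Request], bytes] = http_transport
) -> list:
    """POST a small batch of records and return the transformed records."""
    request = Request(
        SERVICE_URL,
        data=json.dumps(records).encode("utf-8"),
        headers={"Content-Type": "application/json", "Accept": "application/json"},
        method="POST",
    )
    return json.loads(transport(request).decode("utf-8"))


def fake_transport(request: Request) -> bytes:
    """Stand-in for the server: upper-cases each 'city' field (made-up logic)."""
    incoming = json.loads(request.data.decode("utf-8"))
    transformed = [{**record, "city": record["city"].upper()} for record in incoming]
    return json.dumps(transformed).encode("utf-8")


# Swap fake_transport for the default http_transport to call a real endpoint.
result = call_service([{"city": "Brno"}, {"city": "Prague"}], transport=fake_transport)
```

Keeping the transport pluggable means the same few lines can be exercised offline and then pointed at a real endpoint from a cron job or any other caller.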

Building Data Flows Using RESTful API Calls

Data Services brings additional options for building data flows using RESTful API calls in CloverDX, alongside the standard batch-based integrations you're already running.

Data Services opens the way for on-demand data integrations that are initiated by the consumer or uploader, carry little communication overhead, and still need data transformation.

Our architectural thinking was mostly around automated intra-enterprise system-to-system communication, where CloverDX sees most usage today. In terms of development we aimed to simplify and accelerate the creation of RESTful services with JSON payloads, but also let you stay in full control of the payload and HTTP sessions when needed.

On the administration side, all communication over Data Services endpoints can be secured using HTTPS (for now using only a single certificate for all endpoints), and we have implemented health-checking and notification mechanisms for monitoring published endpoints.

Questions about Data Services?

If you want to know more about how you can use Data Services to quickly design processes and publish them as REST APIs, to collect data from applications or to provide data transformations as a service, just get in touch.
